| Target | 0.0001 | 0.001 | 0.01 | 0.1 | 1 |

|---|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| Target | 0.0001 | 0.001 | 0.01 | 0.1 | 1 |

|---|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| Target | Vanilla GAN | StyleGAN |

|---|---|---|

|

|

|

|

|

|

|

|

|

| Target | $z$ | $w$ | $w+$ |

|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

| Source | $z$ | $w$ | $w+$ | Target |

|---|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

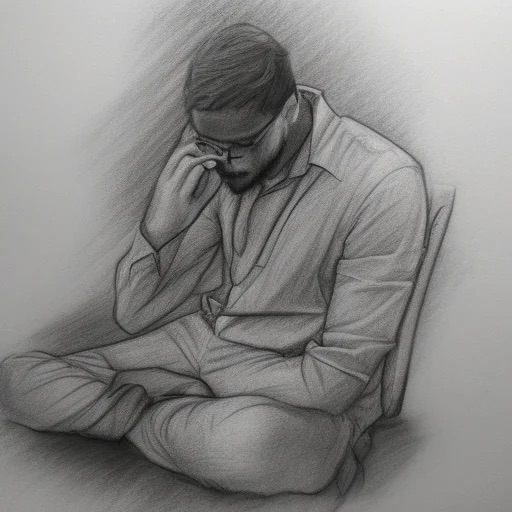

| Scribble | $w$ | $w+$ |

|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| Sketch & Prompt | Result 1 | Result 2 |

|---|---|---|

A cute cat, fantasy art drawn by disney concept artists

|

|

|

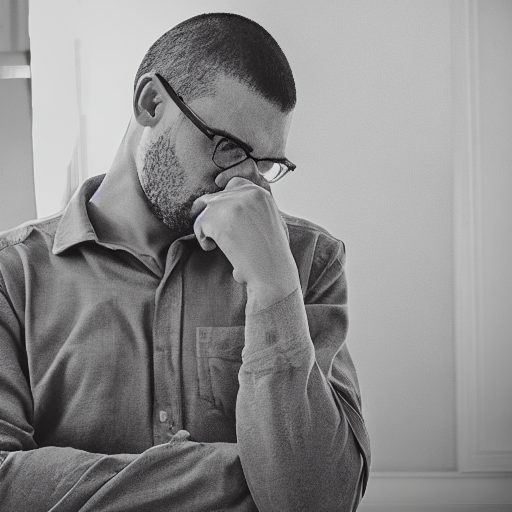

A man thinkng about his homework

|

|

|

A rooster in a farm, photorealistic

|

|

|

A fantasy landscape with a river in the middle

|

|

|

A human character fights a monster with lightsaber in a video game

|

|

|

| Target (128') | $w$ | $w+$ |

|---|---|---|

|

|

|

|

|

|

|

|

|

| Target (256') | $w$ | $w+$ |

|---|---|---|

|

|

|

|

|

|

|

|

|